I'm currently a Staff Research Scientist and Tech Lead at Meta.

I obtained my Ph.D. degree from

.

Before starting my Ph.D. study at HKUST in Sept. 2016, I obtained my bachelor’s degree from Fudan University in June 2016.

My research focuses on improving the efficiency and deployability of foundation models through architectural optimization, low-bit quantization, and sparsity. Specifically, I am interested in using deep learning to solve practical industry problems, such as limited resources and the trade-off between computation and accuracy.

Changsheng Zhao*, Ernie Chang*, Zechun Liu*†, Chia-Jung Chang, Wei Wen, Chen Lai, Rick Cao, Yuandong Tian, Raghuraman Krishnamoorthi, Yangyang Shi, Vikas Chandra

† Corresponding Author and Research Lead

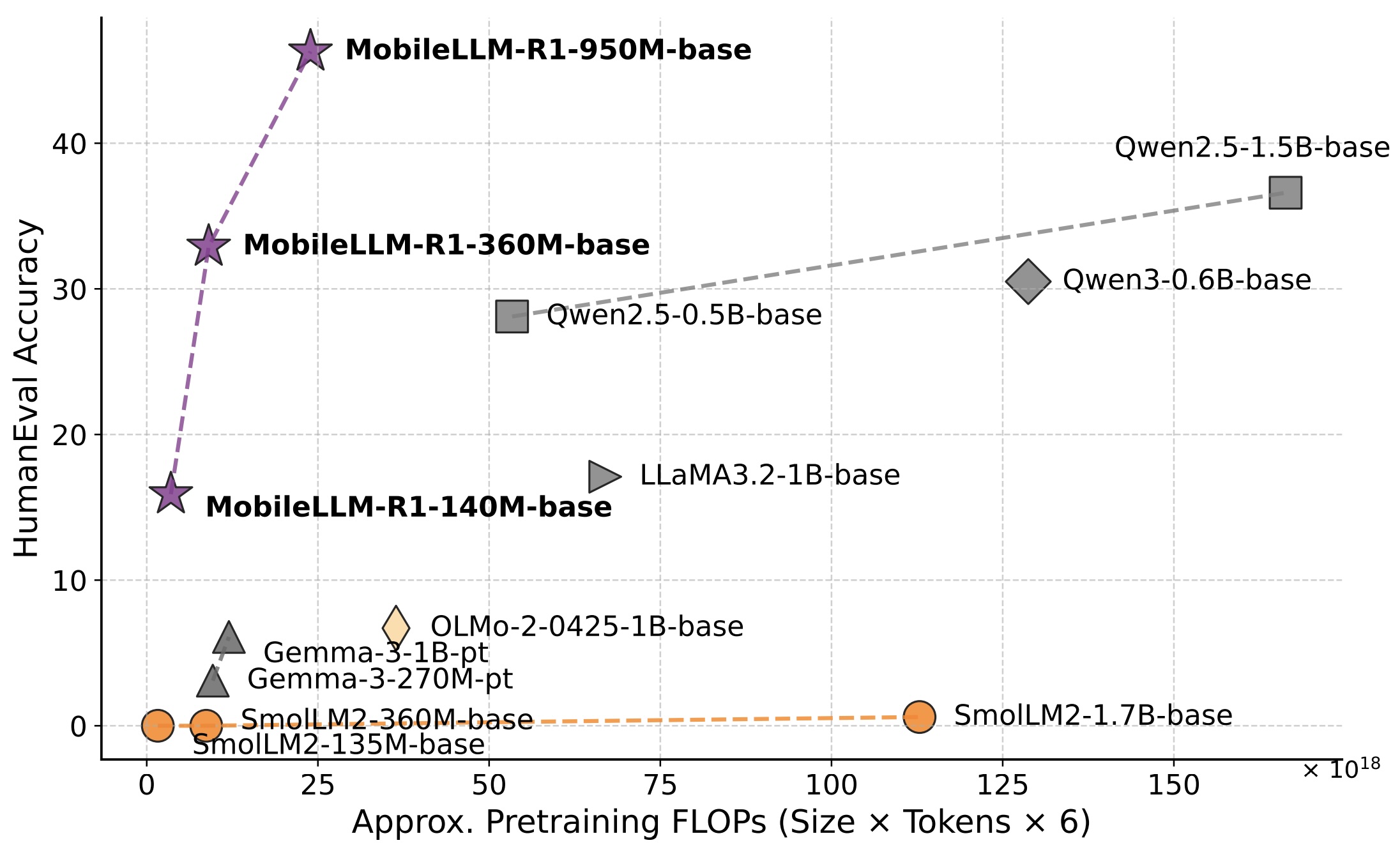

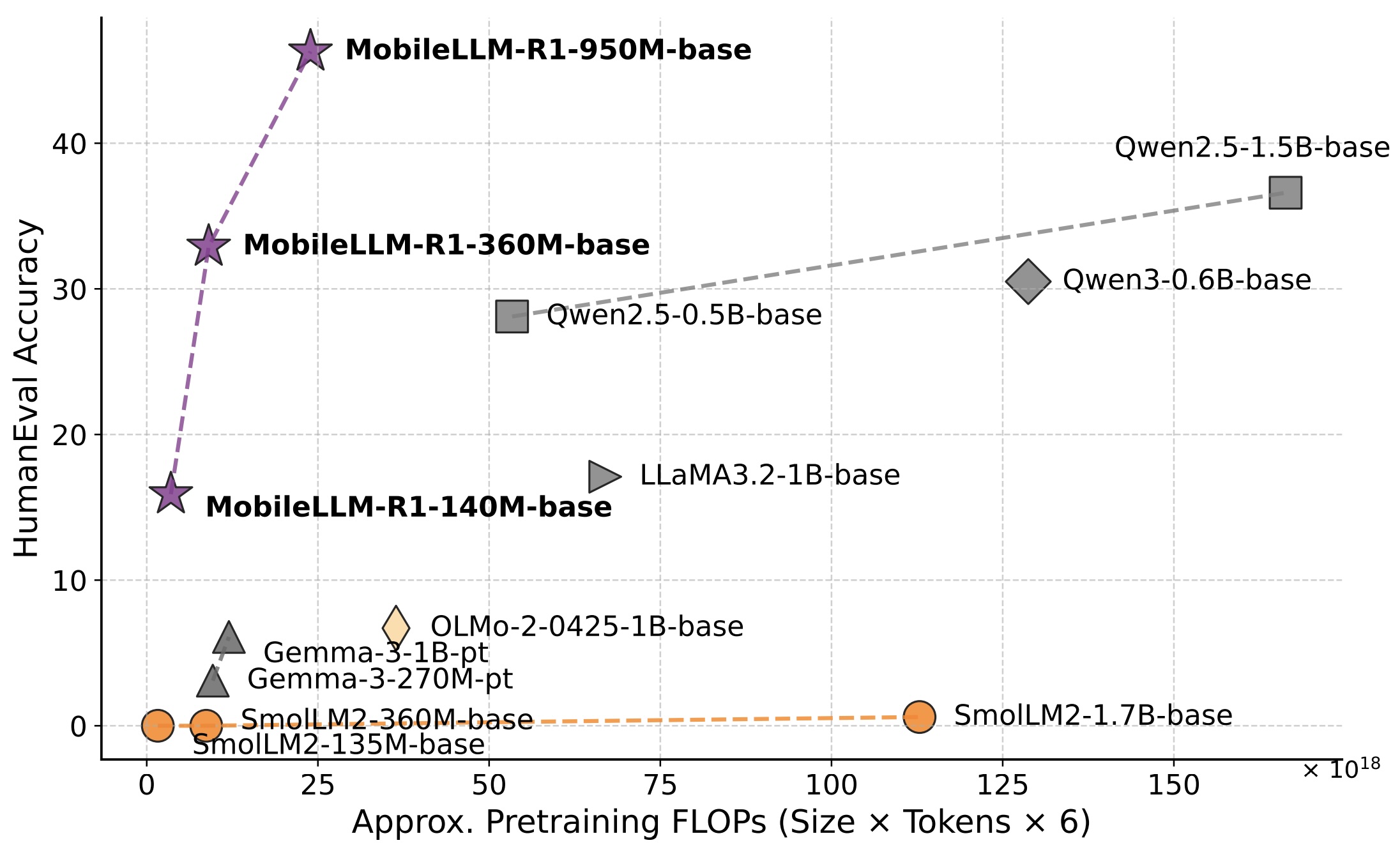

MobileLLM-R1: Exploring the Limits of Sub-Billion Language Model Reasoners with Open Training Recipes

Paper | Models | Code

Zechun Liu, Changsheng Zhao, Hanxian Huang, Sijia Chen, Jing Zhang, Jiawei Zhao, Scott Roy, Lisa Jin, Yunyang Xiong, Yangyang Shi, Lin Xiao, Yuandong Tian, Bilge Soran, Raghuraman Krishnamoorthi, Tijmen Blankevoort, Vikas Chandra

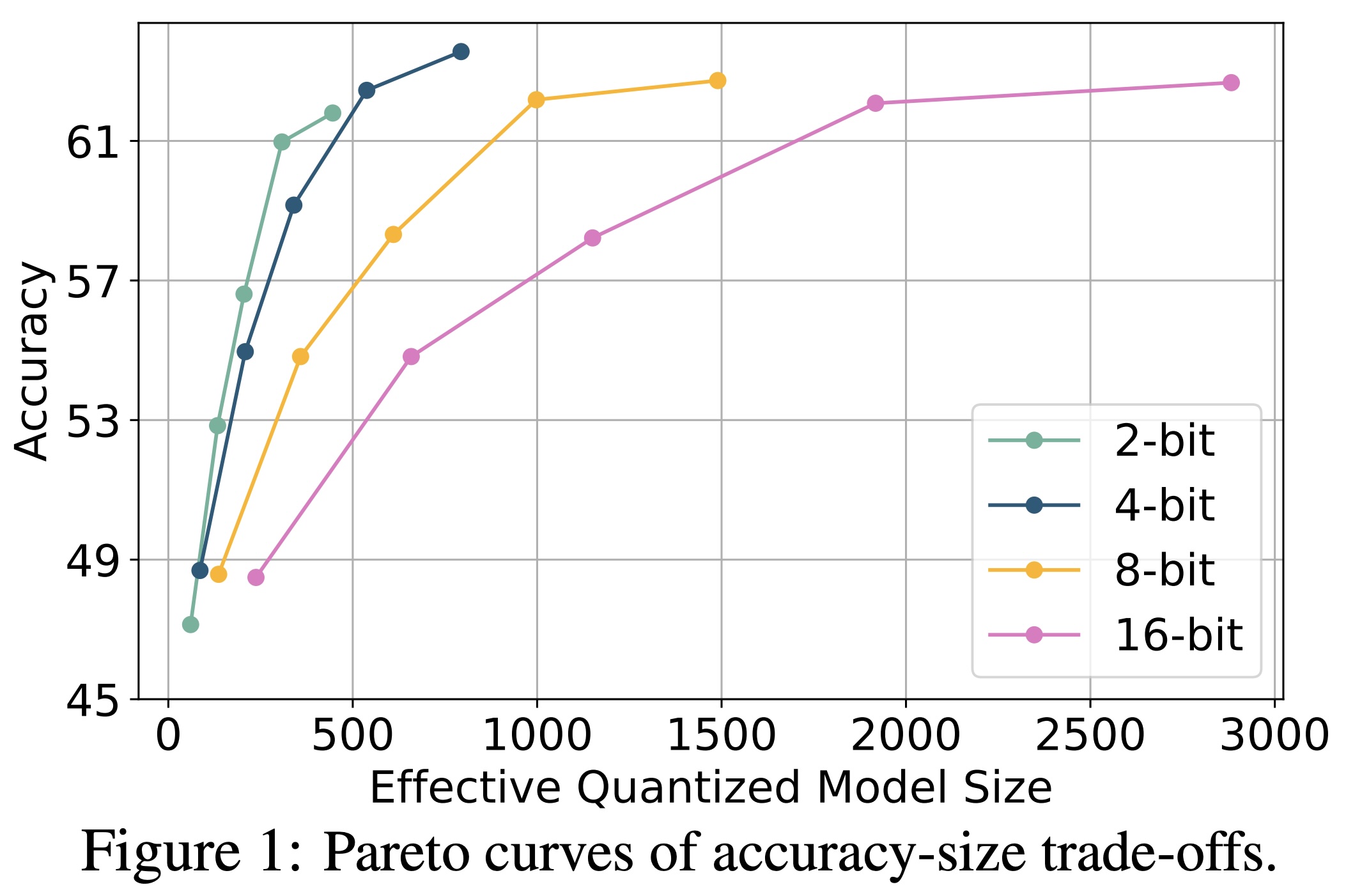

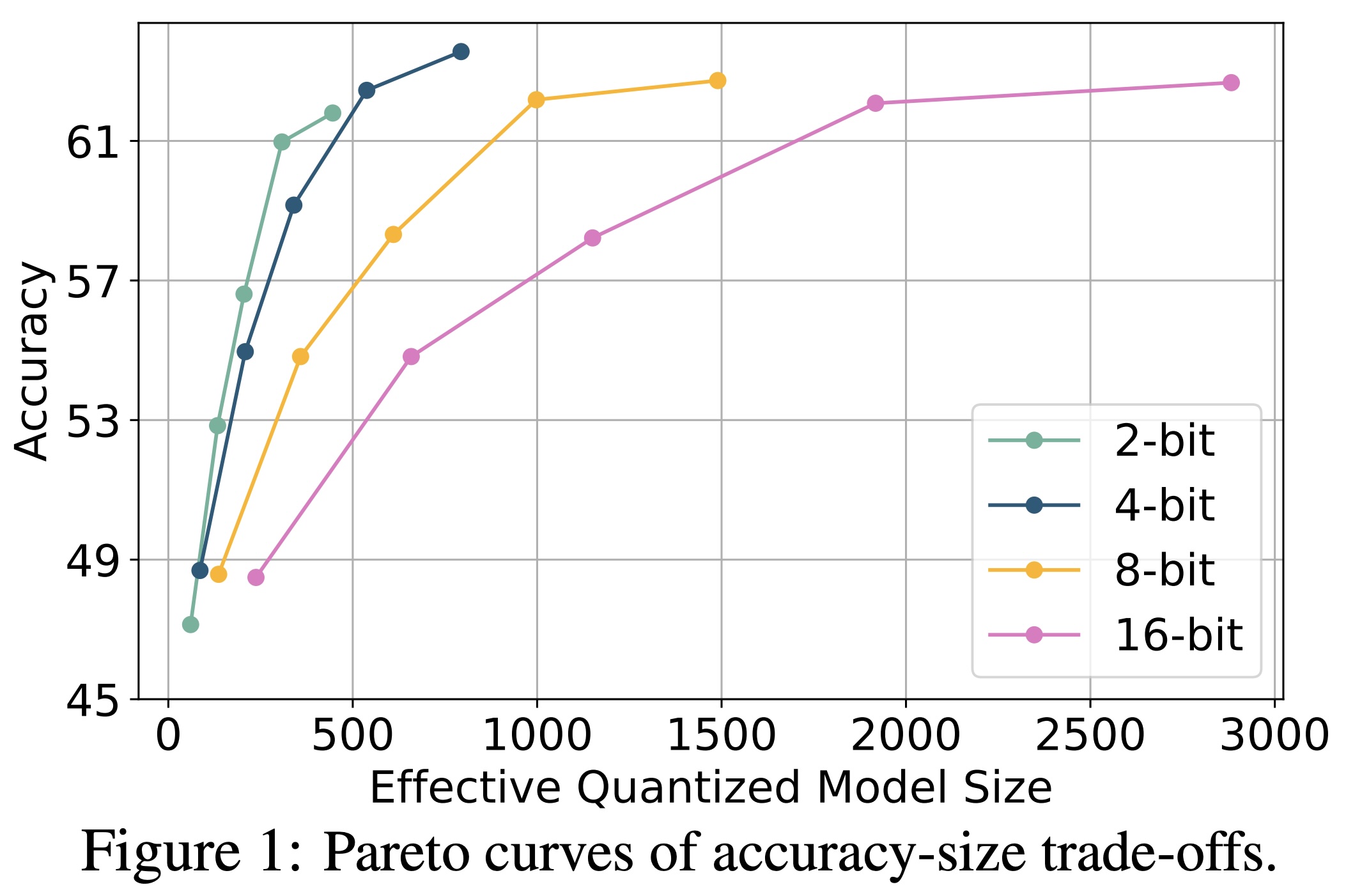

ParetoQ: Scaling Laws in Extremely Low-Bit LLM Quantization

Paper | Code | Models

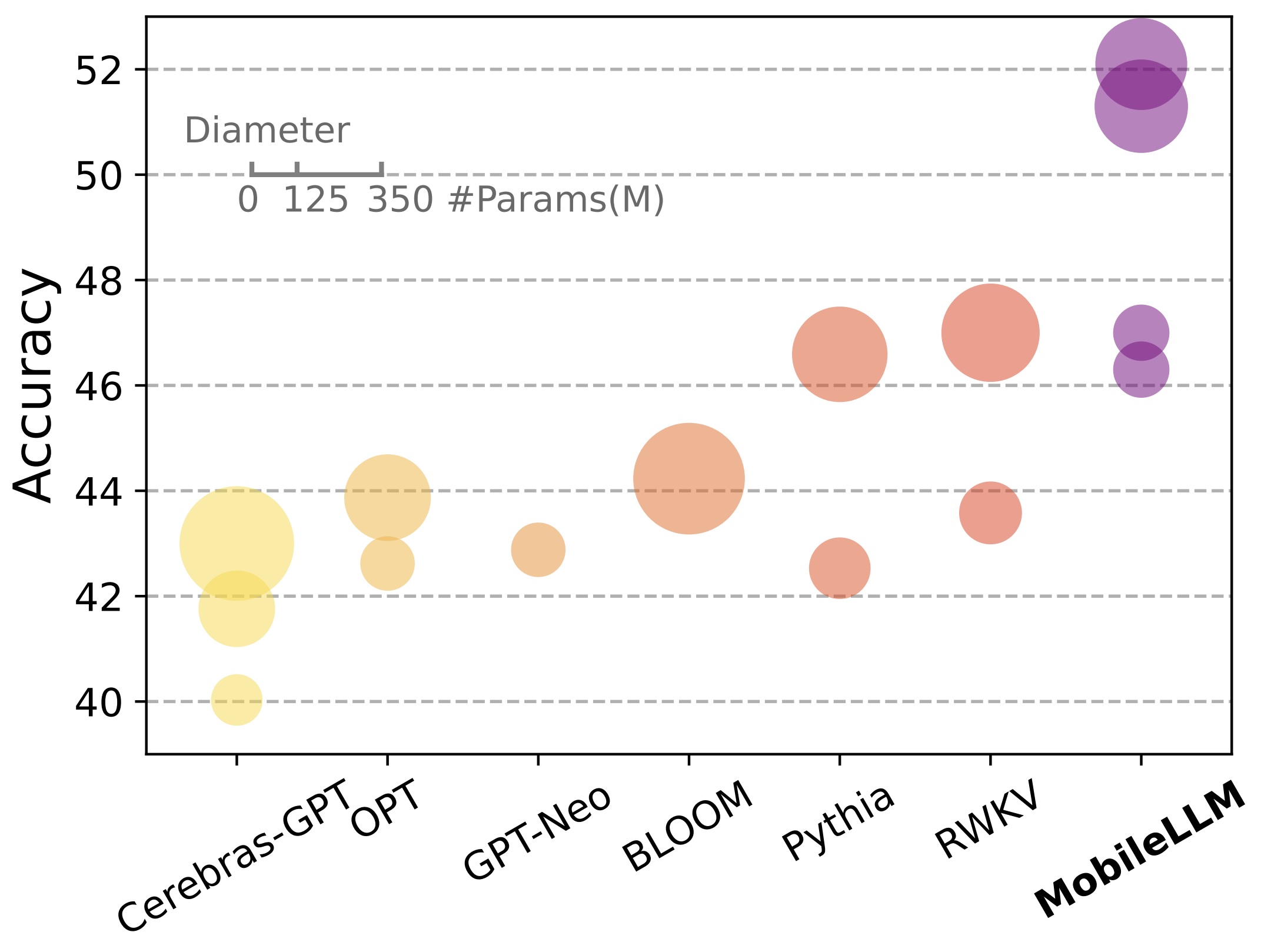

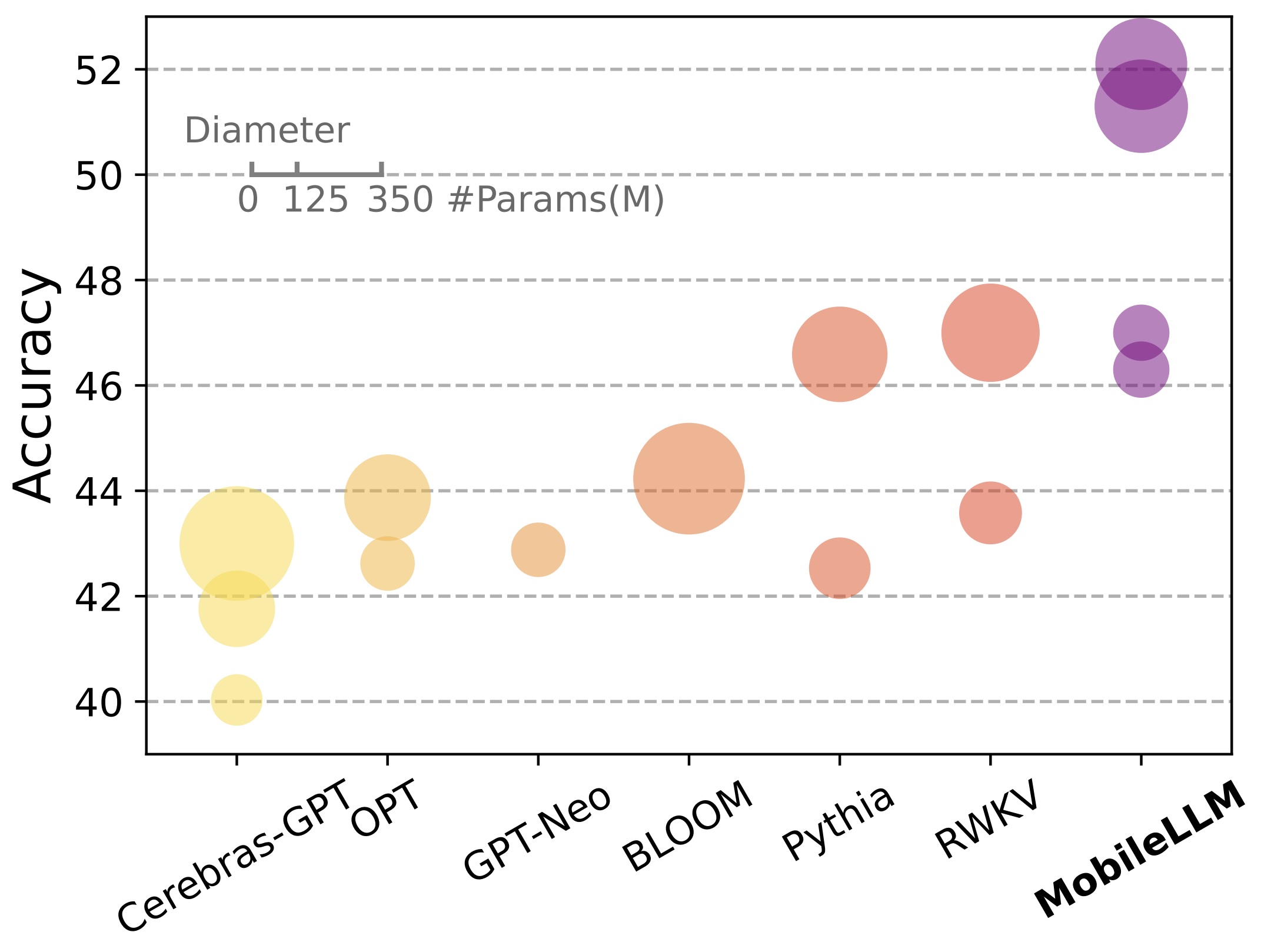

Zechun Liu, Changsheng Zhao, Forrest Iandola, Chen Lai, Yuandong Tian, Igor Fedorov, Yunyang Xiong, Ernie Chang, Yangyang Shi, Raghuraman Krishnamoorthi, Liangzhen Lai, Vikas Chandra

MobileLLM: Optimizing Sub-Billion Parameter Language Models for On-Device Use Cases

Forty-first International Conference on Machine Learning, 2024.

Paper | Code | Models

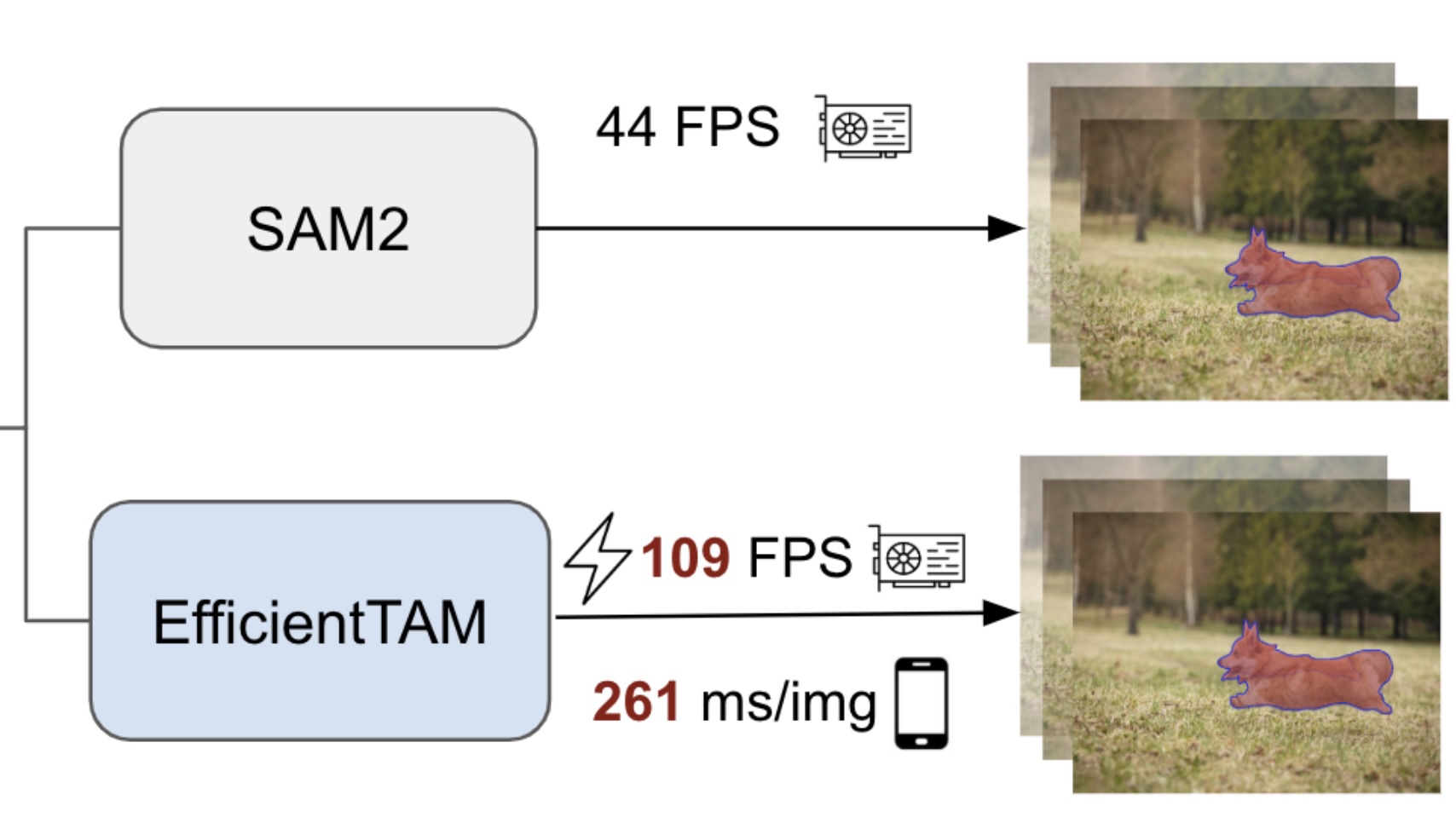

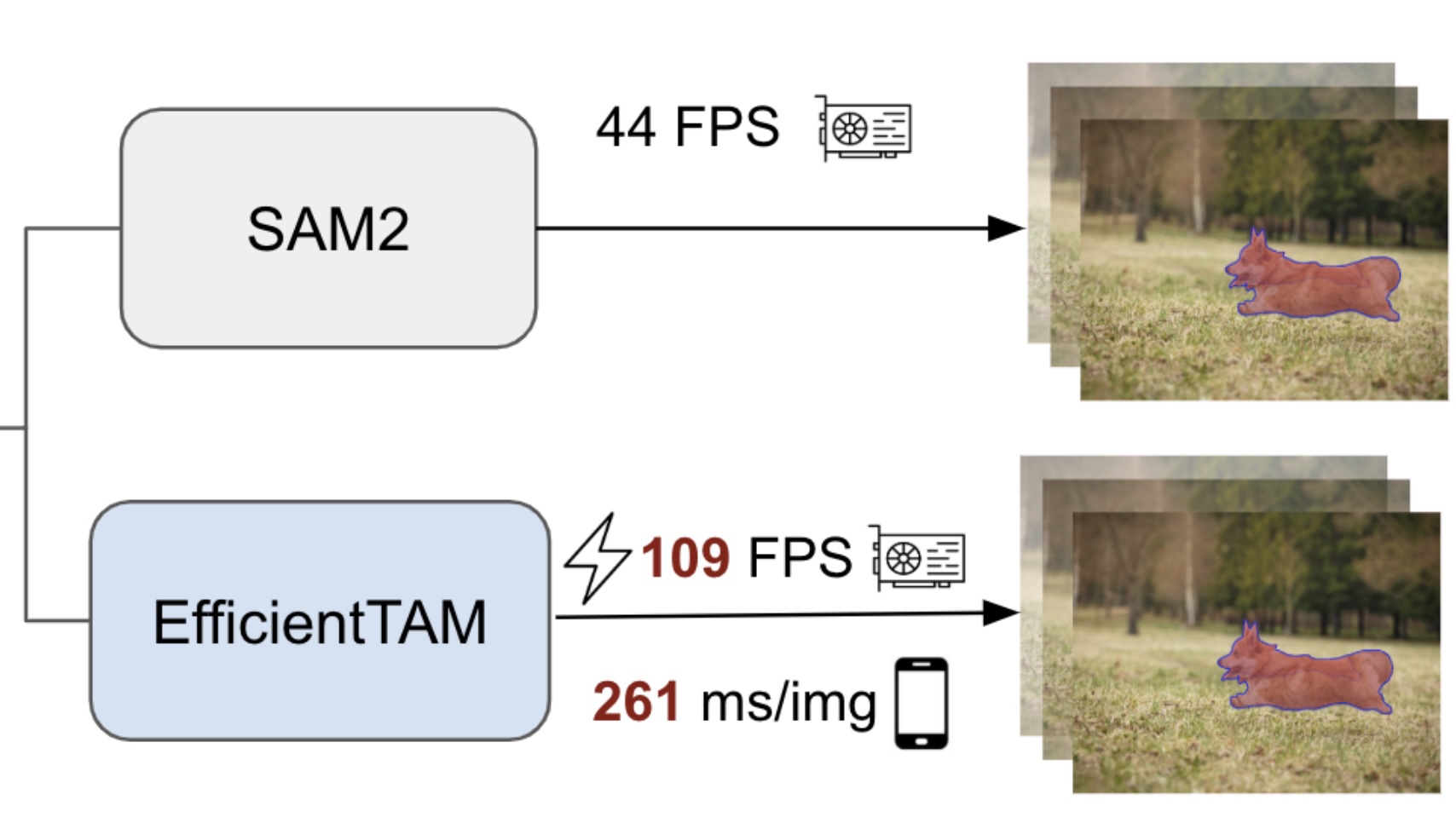

Yunyang Xiong, Chong Zhou, Xiaoyu Xiang, Lemeng Wu, Chenchen Zhu, Zechun Liu, Saksham Suri, Balakrishnan Varadarajan, Ramya Akula, Forrest Iandola, Raghuraman Krishnamoorthi, Bilge Soran, Vikas Chandra

Efficient Track Anything

ICCV 2025.

Paper | Code | Project Page | Models

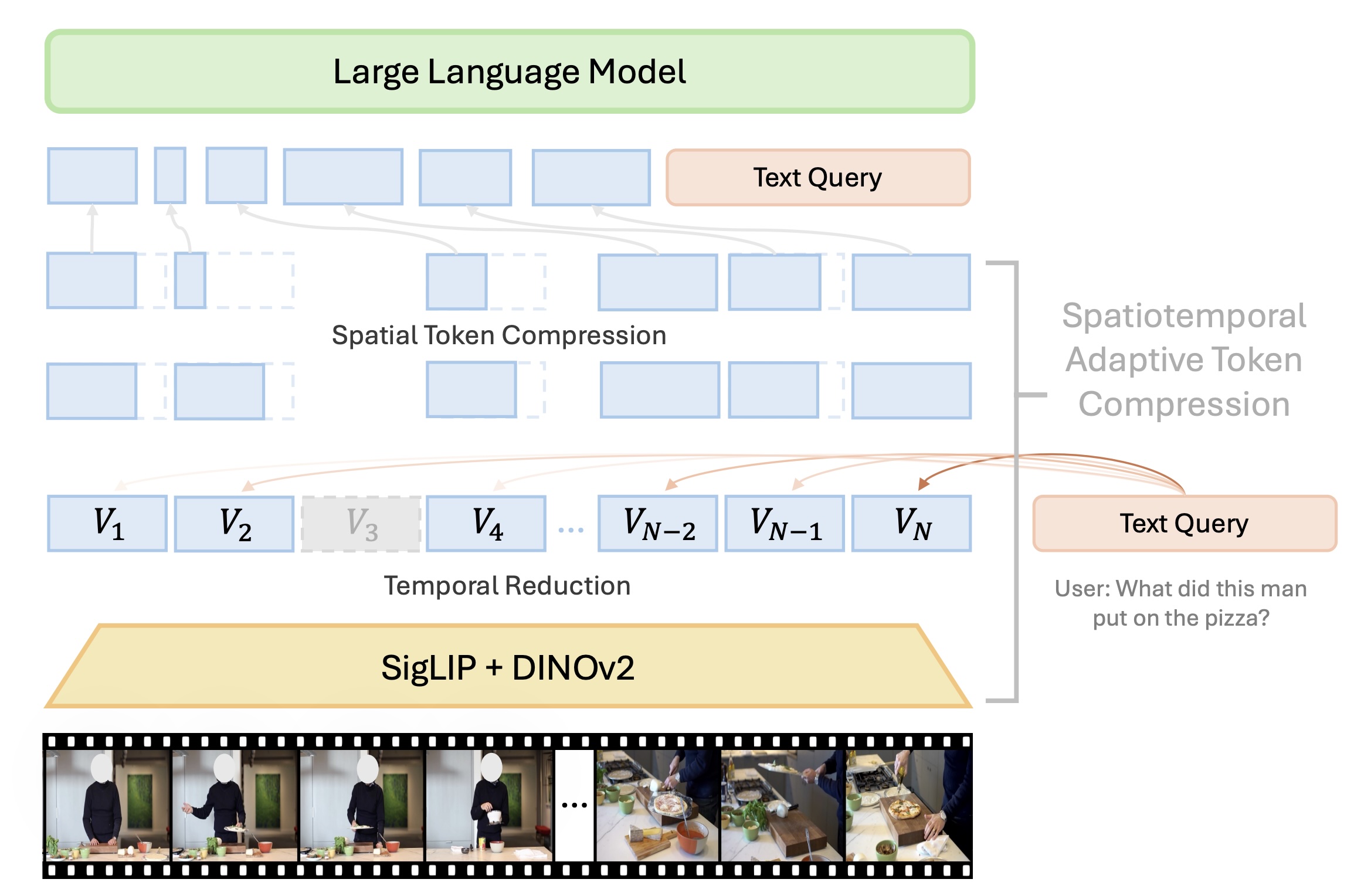

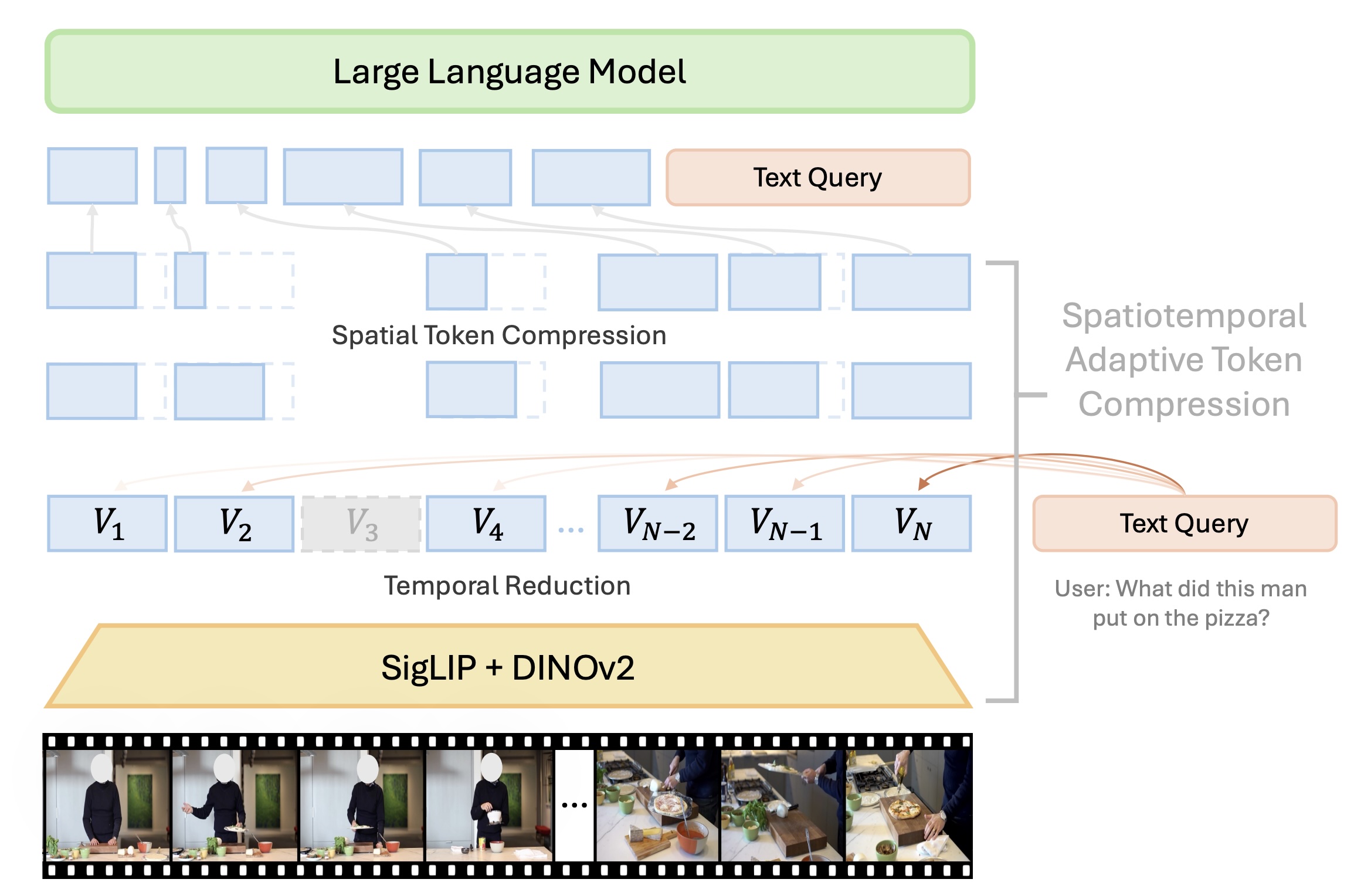

Xiaoqian Shen, Yunyang Xiong, Changsheng Zhao, Lemeng Wu, Jun Chen, Chenchen Zhu, Zechun Liu, Fanyi Xiao, Balakrishnan Varadarajan, Florian Bordes, Zhuang Liu, Hu Xu, Hyunwoo J Kim, Bilge Soran, Raghuraman Krishnamoorthi, Mohamed Elhoseiny, Vikas Chandra

LongVU: Spatiotemporal Adaptive Compression for Long Video-Language Understanding

ICML 2025.

Paper | Code | Project Page

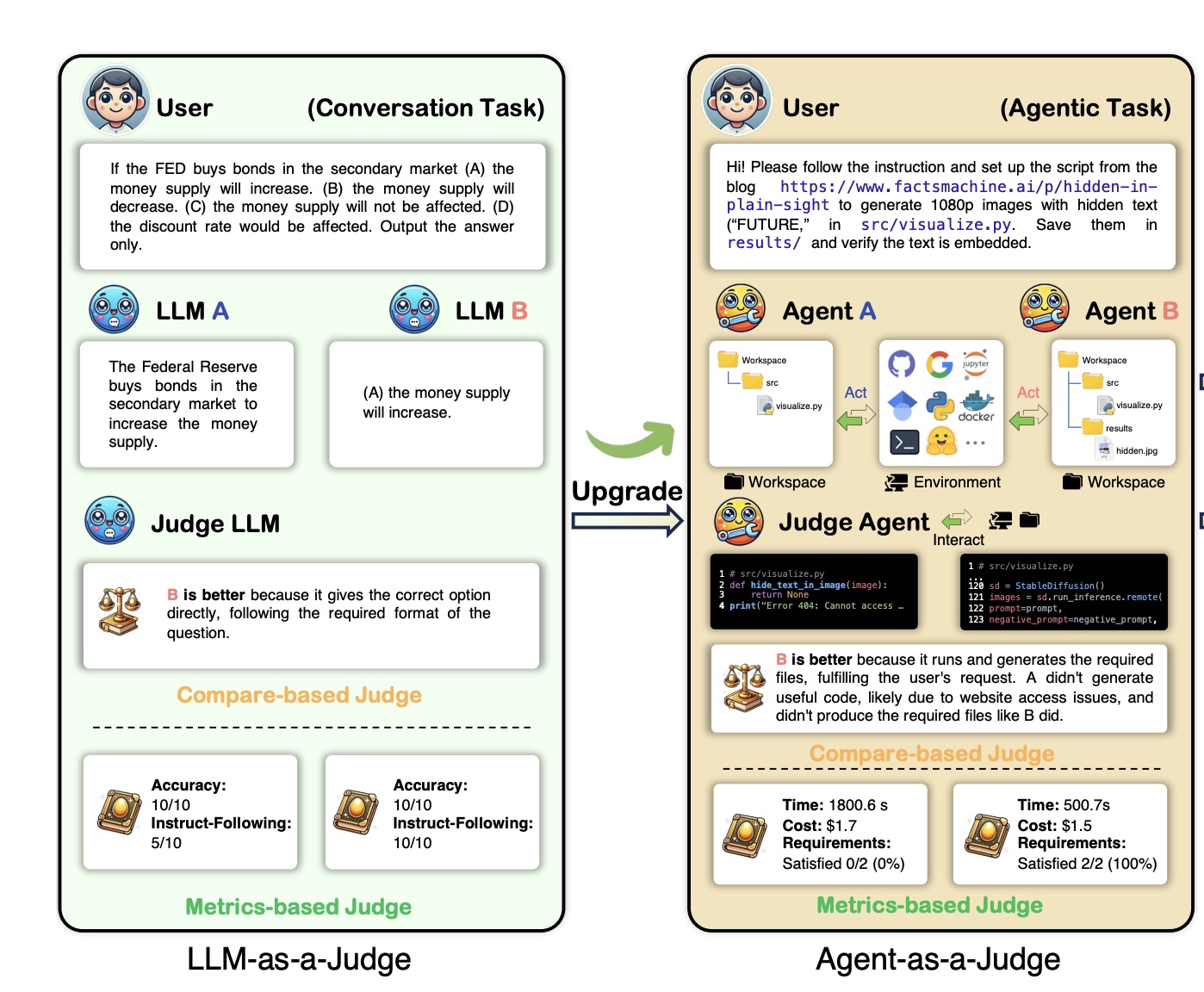

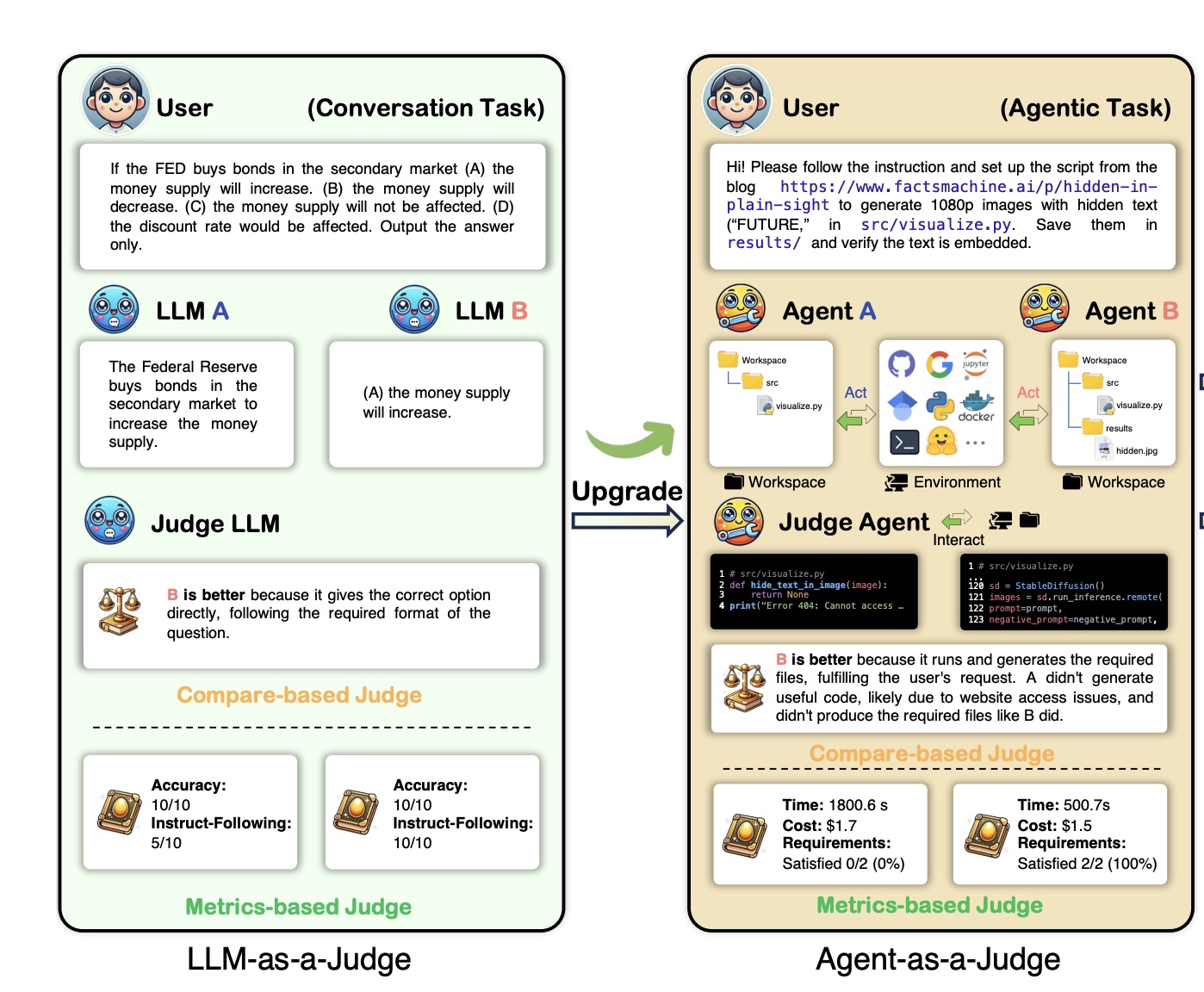

Mingchen Zhuge, Changsheng Zhao, Dylan Ashley, Wenyi Wang, Dmitrii Khizbullin, Yunyang Xiong, Zechun Liu, Ernie Chang, Raghuraman Krishnamoorthi, Yuandong Tian, Yangyang Shi, Vikas Chandra, Jürgen Schmidhuber

Agent-as-a-Judge: Evaluate Agents with Agents

ICML 2025.

Paper | Dataset | Code

Email: zliubq[at]connect[dot]ust[dot]hk; zechunl[at]andrew[dot]cmu[dot]edu